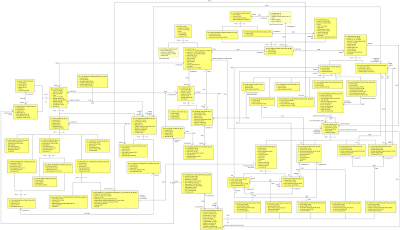

I've been working on the software architecture of the Chess Tool application for the last two weeks. I've also been trying to stay warm given the cold snap here in Colchester. I don't ever remember seeing snow in November before! Anyway, I going to discuss the Values subsystem of the software architecture in this blog. The latest OIM is given below:

The subsystem originally started off with a small set of data value subtypes but has expanded over time to include most of the data values defined in the Data Type subsystem of the OOA of OOA. However, I have discovered some issues with how I defined data values in the OOA of OOA which I will mention as I discuss each subtype below.

Undefined Value is implemented as a Java enum with a single UNDEFINED value. It is useful when dealing with generic values. However, it is not that useful generally since most assignment targets can (and should) be specifically typed. For example, a numeric value attribute will be typed as a NumericValue and if conditional then undefined is represented using null or an associated value status set to UNDEFINED.

Boolean Value is also implemented as a Java enum with FALSE and TRUE values. In my OOA of OOA, I also allowed users to define false and true aliases. However, on reflection that was a bad idea since Action Language code that uses the aliases can hide bugs where a user thinks an alias is TRUE when in fact it is actually FALSE. Instead, users should use enumerated values instead of aliased boolean values.

Enumerated Value design has undergone a number of iterations. The first requirement was to be able to map enumerated types/values to Java enum's which implement Specific Enumerated Type and Specific Enumerated Value interfaces. These values are associated with static enumerated type information allowing them to be used statically within generated code. I supplemented this with a Qualified Enumerated Value allowing a system dependent type to be associated with an enumerated value. However, such values can't be used statically within generated code. Later I added the concept of a Generic Enumerated Value which is enumerated type independent. It can be matched against any enumerated value with the same legal value name. However, generic enumerated values are not ordered and so can't be used in relational comparisons (unless a promotion to a typed value is possible).

Symbolic Value encapsulates Java String values. However, Java string length is based on 16 bit character counts for backwards compatibility, not Unicode character counts. I prefer to use actual Unicode character counts instead.

Numeric Value has numerous subtypes which have evolved considerably. They are shown on the OIM in promotion order from left to right. Automatic ID Value encapsulates non-negative Java int values (no time unit). Limited Integer Value encapsulates Java long values. Integer Value encapsulates Java BigInteger values. Decimal Value encapsulates Java BigDecimal values. Rational Value encapsulates a numerator/denominator pair allowing lossless division and the representation of negative zero, NaN, Infinity and -Infinity values. Rational Interval Value encapsulates a rational interval with a lower endpoint numerator, an upper endpoint numerator and a shared endpoint denominator. This is a more specialized numeric value not often supported in traditional programming languages.

The final subtype is Floating Point Value which encapsulates Java double values. Such values are normally approximate values since underflow and overflow is handled using approximation without user intervention. For this reason, all such values are prefixed with '~'. Once a numeric value is promoted to a floating point value, it is never narrowed without user intervention since all previous subtypes are assumed to be exact values while floating point values are assumed to be approximate.

All of the above subtypes handle negative zero consistently. However, whether a negative zero is preserved after been assigned to an assignment target (attribute, variable etc.) depends on the numeric type of that target. Most programming languages only handle negative zero in floating point arithmetic. However, I believe multiply and divide should be defined consistently across all numeric values, i.e. result sign is negative only and always if operand signs differ. However, negative zero is invisible when comparing numeric values. It only has a significant impact when it is used as a divisor resulting in a signed infinity (assuming dividend non-zero).

Rounding modes are associated with numeric types not numeric values. However, most programming languages perform rounding immediately when integer divide is performed. I prefer to promote the result of a division with a non-zero remainder to a rational value and leave any rounding to when the result is assigned to a target with an associated numeric type. Obviously, the remainder '%' operator doesn't make any sense without immediate rounding to determine the remainder, i.e. a lossless remainder operation always returns zero! Lossless (or lazy) division can have a significant impact on how code executes if the user assumes implicit immediate rounding. However, it should be possible for an analyst to change a numeric type without reworking Action Language code so inconsistent arithmetic rules across numeric types is something that should be avoided.

Numeric value also supports an optional time unit allowing duration values to be defined. This concept may be extended in future to support other unit types. However, allowing time units now helps develop an understanding of how units can be supported in the future. Subtracting a time from another time results in a duration. Performing arithmetic on two durations requires the duration time units to be the same. This can be done automatically except where one operand is DAY or smaller and the other is MONTH or larger since you can't convert durations between days and months outside the context of specific times.

Time Value is no longer a numeric value since most arithmetic operations don't make sense. Only subtraction of time values is allowed. I originally defined time values as numeric values in my OOA of OOA. An optional time unit is associated with time values so that when times are subtracted, the time unit of the resulting duration can be determined. The time unit is also used when converting time values into numeric magnitudes. Underlying time values are represented in UTC time relative to the Java Epoch ('1970-01-01 00:00:00'). An optional time zone is also associated with time values for determining calendar specific component values such as year, month, day etc. However, time unit and time zone are not preserved during assignment to a time typed target since that target will specify it's own preferences.

Handle Set Value allows object instance subsets to be represented. They are created from object instance lookups and relationship navigations. All non-empty handle sets are backed by the full set of object instances (which acts as the universal set) so that absoluteComplement() can be invoked on such values. Empty handle sets are another matter. A Qualified Handle Set Value allows backed empty sets to be created but such values can't be used statically since they are system dependent. That was why I introduced a Specific Empty Handle Set Value which allows an empty set to be statically defined for handle sets with specific object/class names. I also defined a Generic Empty Handle Set Value for situations where the object name is unknown. However, specific and generic empty sets have no associated universal sets so absoluteComplement() can't be applied to them.

I initially left handle sets unordered. However, most set operations (contains, union, intersection, difference) are much quicker when the sets are consistently ordered. To make consistent ordering possible, I now define a system-wide fixed ordered automatic ID literalID on all object instances. Such an ID is easy to allocate and defines a run-time ordering across all object instances. I also use this ID when formatting handle set literals. However, this ID is not persisted across runtimes.

Composite Value allows structured values to be created with readable but non-writeable components. Such values are discussed in xtUML02. I wasn't going to support composite values initially. However, they have immediate application as input/output parameter sets. They can also be used to create object instance snapshots when necessary. I don't currently allow composite values with no components.

List Value allows generic value lists to be created and iterated over. Such values should never be associated with attributes since they should never have a multiplicity of many. However, they may be created and passed as parameters or event data items since we allow a multiplicity of many on parameters in general. More commonly, they are useful within Action Language operation bodies where processing has been divided into stages. I'm not sure there are any benefits allowing other collection types (e.g. Bag, Set and OrderedSet) since their use is strictly temporary and localized.

I've run out of time so I won't go into predefined operations today or literal string syntax. Next time, I will also discuss the Types subsystem which sits above the Values subsystem.